
It helps to know whether a drive to the store is going to take 12 minutes or 2 hours when I am trying to schedule my errands for the week.

Likewise, when planning to work through a learning module, task, or activity, it helps to know about how long it will take to complete. The dedication to "time on task" is easier to make when I, as a learner, know how much time is going to be required. Like my trip to the store, it has a big influence on how I plan my time and how I prepare myself. This is why I add an estimated time to complete (ETTC) to actionable items in my courses.

I have found that ETTC helps learners overcome procrastination by eliminating some of the ambiguity about how much time a commitment to action entails. Considering the messy schedules they often have to navigate, it's useful for them to know if they can squeeze a chapter reading in before their meeting that starts in 30 minutes. If they don't know that it only takes 10-15 minutes (on average) to read the chapter, they likely will not even bother. ETTC enables them to pace and schedule their engagement effectively.

In addition, an ETTC gives them a measure to compare their own time on task against. If it is taking an hour to complete something that should take 10-15 minutes, that is a red flag that something is amiss.

Did I mention that ETTC should be a range? It should be a range. Because you are bad at estimating. If you know the time to complete, then it's just TTC. Which is fine too.

We've seen a commitment to communicating time to complete work well elsewhere, from cooking recipes to time stamps on videos. ETTC is useful information for making a decision about when or whether to engage with something. It's also useful to know that if this recipe should only take 20-30 minutes but I'm on day two, something is wrong. I'm not going to spend another two days making Pasta de Pepe, but I will watch an informative 3 minute video. Time is a cost, and learners want to know how much they are paying.

note1: ETTC helps schedule, pace, prepare, compare

postscript1: Don't get too macro with your ETTCs. Try to provide an ETTC for each actionable item.

postscript2: Use learner feedback to improve your ETTCs.
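One way to act on learner feedback is to recompute the ETTC range from the times learners actually report. The sketch below is only an illustration, not a method from this post: the function names, the choice of the middle half of reports (25th to 75th percentile), the rounding to 5 minutes, and the "twice the upper bound" red-flag rule are all assumptions you would tune for your own course.

```python
import math
from statistics import quantiles


def ettc_range(reported_minutes, round_to=5):
    """Return a (low, high) ETTC range in minutes from learner-reported times.

    Assumption: the range spans the middle half of the reports (25th to 75th
    percentile), widened outward to the nearest multiple of `round_to`.
    """
    if len(reported_minutes) < 2:
        raise ValueError("need at least two reported times")
    q1, _median, q3 = quantiles(reported_minutes, n=4, method="inclusive")
    low = math.floor(q1 / round_to) * round_to
    high = math.ceil(q3 / round_to) * round_to
    return low, high


def is_red_flag(minutes_spent, ettc, factor=2.0):
    """True when time on task is far beyond the upper end of the ETTC.

    Assumption: "far beyond" means more than `factor` times the upper bound.
    """
    _low, high = ettc
    return minutes_spent > factor * high


# Hypothetical reported reading times, in minutes, including one outlier.
reports = [9, 11, 12, 14, 15, 18, 60]
print(ettc_range(reports))            # (10, 20)
print(is_red_flag(60, (10, 15)))      # True: an hour on a 10-15 minute task
```

Using quartiles rather than the minimum and maximum keeps a single very slow or very fast report from stretching the range until it is no longer useful for scheduling.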


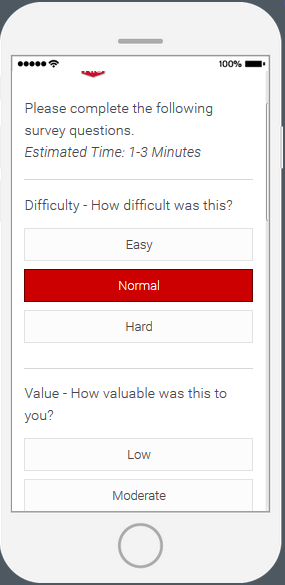

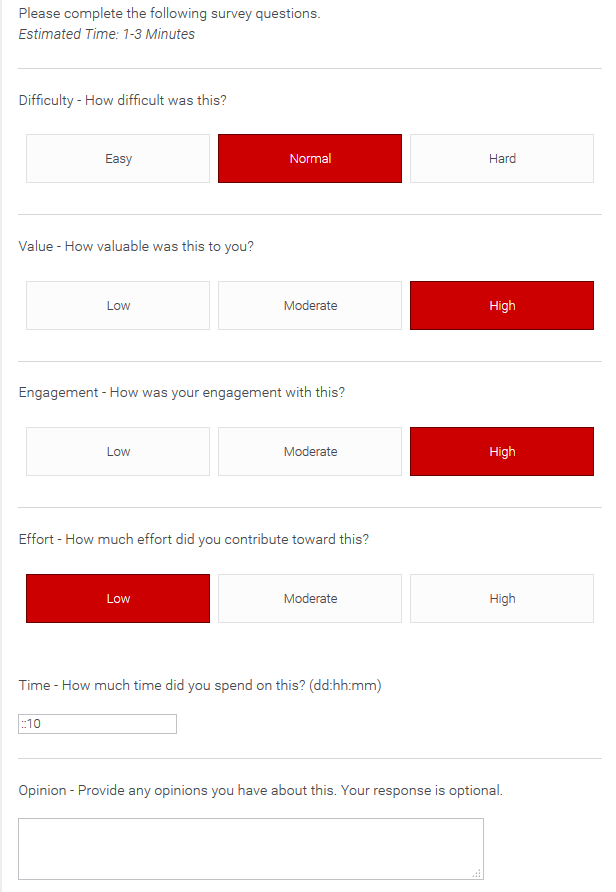

Difficulty (Easy - Normal - Hard): The difficulty scale reports learners' perception of personal challenge or required skill to meet the target's requirements.

Value (Low - Moderate - High): The value scale reports learners' perception of the usefulness the target provided them in exchange for their time and effort.

Engagement (Low - Moderate - High): The engagement scale reports learners' perception of their attention, motivation, and interest toward the target.

Effort (Low - Moderate - High): The effort scale reports learners' perception of their personal commitment toward the target. Effort is a hedge against the other indicators, which reflect more on design than on external motivators.

Time (dd:hh:mm - modifiable): Learners' report of the time spent on task, or exposure to the target.

Opinion (optional essay or short answer): Opinion is optional written feedback. It can be a qualitative summary, highlights, lowlights, suggestions to improve, etc.

USES

I've used the information from this survey in two ways:

(1) To provide formative feedback that allows me to be responsive to learner cohorts: Do they need more remedial instruction? Are they taking too long on the readings? Did they enjoy this activity? These are elements that I can use to tune instruction in motion: add more activities like this, divide the remaining readings, post remedial content, etc.

(2) To provide summative feedback that will inform the next design versions. If 80% of my students thought the readings were too hard, maybe it's time to reevaluate or replace the text, or provide additional support. I can also track the feedback throughout the course and across student groups.

There are several useful insights that this simple instrument can yield. Learners may report that an activity was difficult but very engaging and valuable, or that an assignment was valuable but took too long and wasn't engaging. The first might encourage the development of similar activities; the second might spur the redesign of the assignment.

Importantly, it also gives learners the opportunity to provide direct feedback on what might be improved (or what exactly was so great) through the opinion field, so they can tell you exactly what you did wrong and you can fix it.

LEARNER REFLECTION

I've found that this tool also helps learners reflect on their experiences, even if briefly. This is especially useful if DVEETO is used with mid-level experiences like online modules or multi-tiered assignments or activities, since learners have to recall their experiences and their own sense of commitment (effort) as a whole to provide a response. Since reflection tends to be good for long-term retention, this tool can be a cheap and easy way to promote it.

LIMITATIONS

This is not a research-grade instrument. It's not meant to be. It's intended to provide useful feedback for course development and instruction, in a form that learners will actually complete. It allows me to compare my design expectations against their real experiences and make informed changes. I would not use this to justify grand declarations about how a particular course element improved student learning outcomes.

The number of possible responses is intentionally limited (3), and I advise against adding more options. More options take longer and require more thinking. Long, reflective thinking is fine for end-of-course/training surveys. That's not what this is for. No one wants to spend 10 minutes on a survey covering a 5 minute video. That said, you may want to modify the questions.

MODIFICATIONS

As-is, this tool may not be for you. I work primarily in a higher-education environment, teaching undergraduates and training faculty, so you may find that you don't need some of these fields, or that one is missing that would be useful to you and your context. I think these are fairly universal, but all six questions are not always necessary. In fact, I recommend dropping as many questions as you can, but no more than that. If you are using the survey on the above-mentioned 5 minute video, do you really need to know how long your learners spent on it? I'm going to guess it was about 5 minutes.

Again, this isn't some rigorously tested research instrument, so mix-and-match at your discretion. Just don't make it an hour long, because no one is going to take the time to complete it unless you make them, and then they will hate you and your dumb survey.

WHAT ABOUT ASSESSMENT?

What about it? This is not an assessment tool; it's a feedback tool. You aren't going to use it to find out if learners actually learned the content. That's a separate issue. However, it can be attached to items such as tests or assignments to inform you of learners' perceptions of them. It would be interesting if, say, your learners report that a test was easy but end up failing miserably. There is probably something there for you to fix.

It's also worth noting that assessment by itself is not the best way to judge the effectiveness of your instructional design. Learners may simply be highly motivated to get through your awful course because they really, really want to be a doctor, or an engineer, or to be in compliance with your company's new policy, or so their parents don't take away their video games. Don't be fooled into thinking that performance alone is a good indicator of effective design. Ask your learners.
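For anyone collecting these responses in a spreadsheet export or a script, the six DVEETO fields described above could be recorded and tallied roughly as follows. This is a minimal sketch under stated assumptions, not the author's tooling: the `Response` class and `shares` function are hypothetical names, time is stored as plain minutes rather than dd:hh:mm, and every field is optional to reflect the advice to drop questions you don't need.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Response:
    """One learner's DVEETO answers; any unused field stays None."""
    difficulty: Optional[str] = None   # "Easy", "Normal", or "Hard"
    value: Optional[str] = None        # "Low", "Moderate", or "High"
    engagement: Optional[str] = None   # "Low", "Moderate", or "High"
    effort: Optional[str] = None       # "Low", "Moderate", or "High"
    minutes: Optional[int] = None      # assumption: time kept as minutes
    opinion: Optional[str] = None      # optional free-text feedback


def shares(responses, scale):
    """Return the fraction of answered responses at each level of one scale."""
    answers = [getattr(r, scale) for r in responses
               if getattr(r, scale) is not None]
    if not answers:
        return {}
    counts = Counter(answers)
    return {level: count / len(answers) for level, count in counts.items()}


# Hypothetical cohort feedback on a reading assignment.
cohort = [
    Response(difficulty="Hard", value="High", minutes=45),
    Response(difficulty="Hard", value="Moderate", minutes=60),
    Response(difficulty="Hard", value="High", opinion="Too dense."),
    Response(difficulty="Hard", value="Low"),
    Response(difficulty="Normal", value="High", minutes=30),
]
print(shares(cohort, "difficulty"))   # {'Hard': 0.8, 'Normal': 0.2}
```

A tally like this is enough to spot the "80% thought the readings were too hard" kind of signal; it is not meant to support claims about learning outcomes.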

Author: Cameron Wills is another guy with ideas and opinions. Half-baked concepts with incremental improvements go here.

Archives: January 2022


Photo used under Creative Commons from wuestenigel
